Probabilistic Topics

Key to the understanding of topic modeling as a whole, and specifically to Latent Dirichlet Allocation (LDA) is the principle that topics are collections of words that occur with a certain frequency.

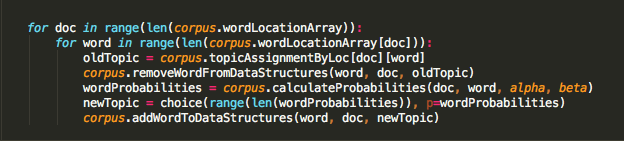

Topics in a text modeled with LDA are initially assigned in a random fashion. From there, LDA iteratively reassigns topics to each word in turn based on a variety of factors. These include the co-occurrence of this word with others in the text, the topic make-up of the document containing the word, and hyperparameters alpha and beta that allow topics to assimilate new words and documents to assimilate new topics, respectively..

Evaluating Topic Models

Since LDA is unsupervised, it is vital to devise criteria by which to evaluate topic models.

- Are topics distinct in their word distribution?

- Are documents distinct in their topic distribution?

- Are more specific topics localized to a few documents?

- Are more general topics prevalent as smaller proportions of more documents?

- Are topics generally irreducible into smaller, unrelated topics?