Experiment

Experimental Procedure

Intro and Methods

We conducted an experiment to evaluate the effectiveness of Practicum's mass feedback and interactivity. Specifically, we compared Practicum with a worksheet version of the same material. We selected a few of Practicum's activities on objects and classes and gave them to the students for this experiment. The worksheet contained the same set of problems, with similar guidance and activities, but offered much less feedback and interactivity than Practicum.

We recruited current and former CS111 students to participate in our research. We randomly assigned each student to either the experimental group, which studied with Practicum, or the control group, which studied with the worksheets. Students first completed an 8-minute test to measure their understanding of classes and objects before the experiment. They were then split into their groups for a 30-minute study session with either Practicum or the worksheets. After the study session, students completed the 8-minute test again to measure their improvement. The first and second tests were identical, and we compared the improvement in test scores between the experimental group and the control group to check for significant differences.

In addition to the procedures above, we had students fill out surveys so that we could collect data on potential confounding variables we had identified before the experiment.
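The assignment and scoring steps described in this section can be sketched in code. This is only an illustration under stated assumptions: the report does not say which statistical test produced the p-values, so a two-sided permutation test on the difference in mean improvement is used here as a stand-in, and the function names (`assign_groups`, `improvements`, `permutation_p_value`) are hypothetical and not part of Practicum.

```python
import random
from statistics import mean


def assign_groups(participants, seed=0):
    """Randomly split participants into two groups of (nearly) equal size."""
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    # First half: experimental (Practicum); second half: control (worksheet).
    return shuffled[:half], shuffled[half:]


def improvements(pre_scores, post_scores):
    """Per-student improvement: second test score minus first test score."""
    return [post - pre for pre, post in zip(pre_scores, post_scores)]


def permutation_p_value(group_a, group_b, n_resamples=10_000, seed=0):
    """Two-sided permutation test on the difference in mean improvement.

    ASSUMPTION: the original analysis may have used a different test;
    this one is chosen only because it needs no external libraries.
    """
    rng = random.Random(seed)
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    hits = 0
    for _ in range(n_resamples):
        # Relabel students at random and recompute the group difference.
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        if diff >= observed:
            hits += 1
    # Add-one correction keeps the estimate strictly above zero.
    return (hits + 1) / (n_resamples + 1)
```

With real data, one would compute `improvements` separately for each group and pass the two lists to `permutation_p_value`. A result below 0.05 would correspond to the significance threshold used in this report.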

Results

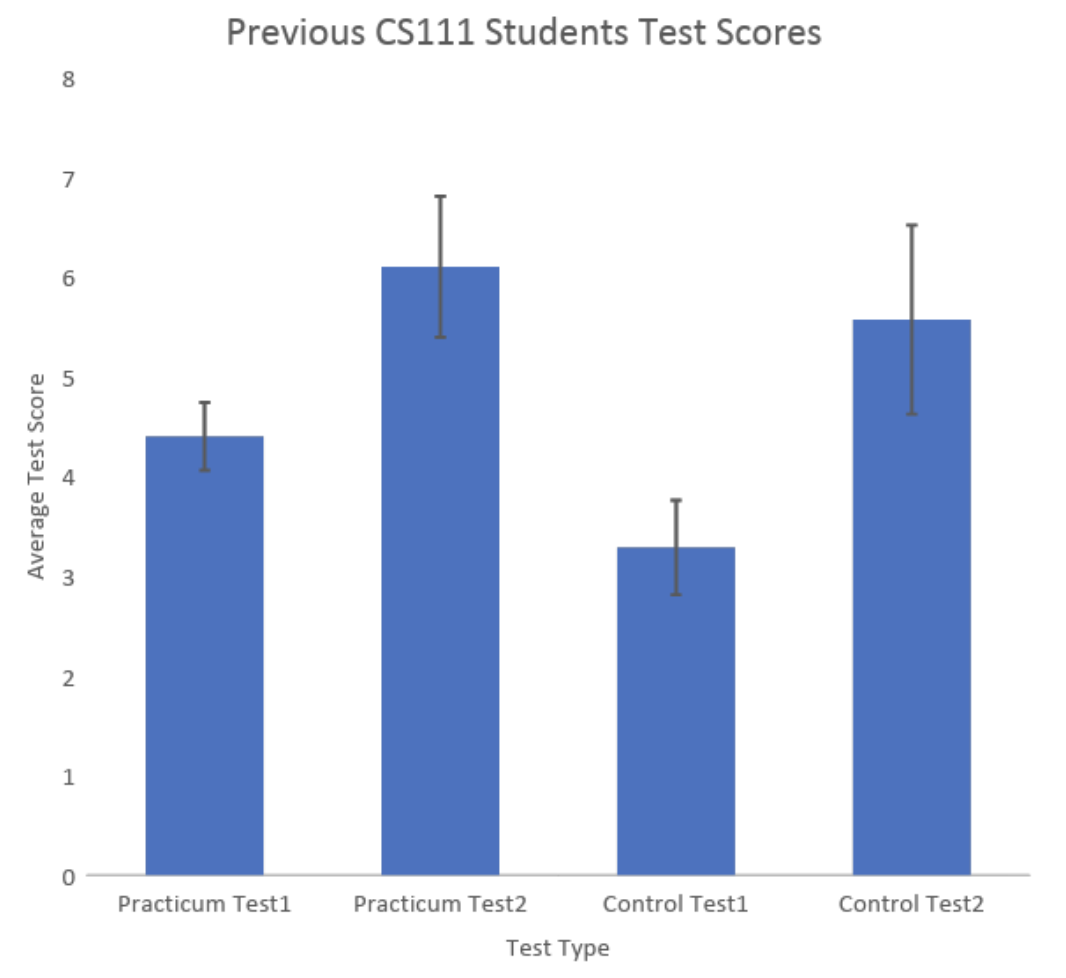

Across all participants, we found no significant difference in test-score improvement between the experimental group and the control group (p > 0.05).

However, when we restricted the comparison to students currently enrolled in CS111, the students who studied with Practicum showed a significantly greater improvement from the first test to the second than those who studied with the worksheets (p < 0.05).

On the other hand, among students who had already completed CS111 before the experiment, we found no significant difference in test scores between the two groups (p > 0.05).

We found no significant differences between the two groups for any of the confounding variables we collected.

Discussion

The data suggest that the mass feedback and interactivity of Practicum may be more effective for students who are in the initial stages of learning. This is plausible: a step-by-step walkthrough of code is likely to be more useful to students who have just learned a new concept, while students who already understand the concept well may find the same walkthrough less useful. Based on this analysis, we believe that Practicum has the potential to be an effective study tool for students.

However, the study has some limitations. First, the sample was relatively small, with only 44 participants in total. Second, our study did not reflect a real-life study situation: we compared Practicum to a worksheet we created ourselves, which was not similar to any of the resources students would have access to in the real world. Third, the students’ improvement was measured by tests that we wrote ourselves. Ideally, we would use data from real class grades. Future research could address these limitations to further reveal the full potential of Practicum as a study tool.